Ever wondered how computers can spot and sort images so well? It’s thanks to deep learning, a key part of machine learning. This tech has changed how we understand images. Let’s explore how deep learning is making computers better at seeing and understanding pictures.

For a long time, computers struggled to identify and sort images. But deep learning changed everything. It uses neural networks, loosely inspired by the human brain, to recognize images. These networks are built from layers, and each layer learns patterns that help the network get better at recognizing pictures.

Computer vision is all about teaching machines to see and understand images. Deep learning has been a big help here. It lets computers learn from data, making them much better at recognizing images than before.

Key Takeaways

- Deep learning has revolutionized image recognition by enabling computers to learn features directly from data.

- Neural networks, inspired by the human brain, consist of input, hidden, and output layers, and deep neural networks with multiple hidden layers excel at image recognition tasks.

- The field of computer vision aims to give machines the ability to perceive and understand visual data, and deep learning has been a game-changer in this domain.

- Deep learning models have surpassed traditional image recognition methods in terms of accuracy, flexibility, and performance.

- The ImageNet dataset, with over 14 million human-annotated images, has been a crucial resource for training deep learning models in image recognition.

Understanding Image Recognition and Computer Vision

Computer vision covers many tasks, including image classification, object detection, and semantic segmentation. Image classification sorts images into different groups. Object detection finds and names objects in an image. Semantic segmentation goes further by assigning a class label to every pixel.

The growth of image recognition tech comes from deep learning and more training data. As AI advances, it’s getting better at understanding images like we do. Deep learning models now analyze images with high accuracy, mimicking human vision.

Key Components of Computer Vision

- Optical Character Recognition (OCR) technology is key for turning printed texts into digital data. This helps in making documents editable and searchable.

- Facial recognition is used for identity checks, making security systems and biometric apps more effective.

- Iris recognition is known for its accuracy in security. It uses iris patterns for precise identification.

The Evolution of Image Recognition Technology

The first digital photo scanner was invented in the 1950s. Since then, many new algorithms and models have been developed. Models like AlexNet and datasets like ImageNet have been game-changers in the field.

Relationship Between AI and Visual Processing

AI and visual processing work together for tasks like image tagging and object detection. Deep learning models, trained on large datasets, have made computer vision systems more accurate and efficient.

Challenges like image variations and occlusions have pushed the field to improve. New data collection and training methods are being developed. Computer vision is set to see even more progress in the future.

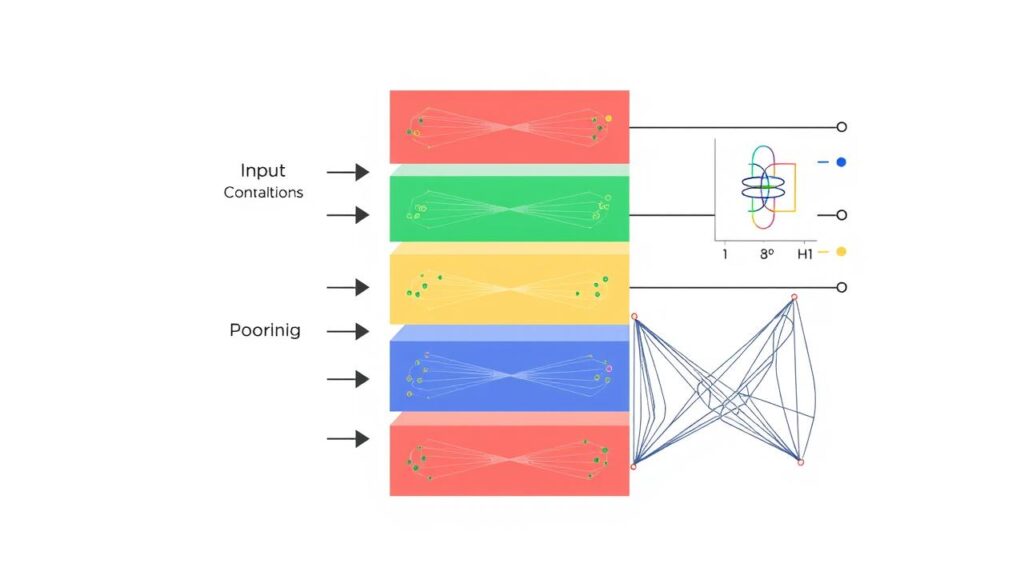

The Architecture of Deep Neural Networks

Deep learning is a big part of artificial intelligence that has changed how we recognize images. At its core are deep neural networks, made up of many layers of artificial neurons. These networks take in data, find important features, and make predictions.

A deep neural network has an input layer, hidden layers, and an output layer. The input layer gets the raw data, like an image. The hidden layers then find and learn complex features from this data. These features build a hierarchy, with each layer spotting more abstract patterns.

The number of hidden layers affects how well a network can predict things. Networks with more layers can often make better predictions, but they need more data and computing power to train.

| Layer Type | Purpose |
|---|---|
| Input Layer | Receives the raw input data, such as an image |
| Hidden Layers | Responsible for feature extraction and learning hierarchical representations |
| Output Layer | Generates the final predictions or decisions based on the learned features |

The design of deep neural networks is key to their success in image recognition. The neural network layers work together to spot complex patterns. This lets them make accurate predictions.
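To make the input, hidden, and output layers concrete, here is a minimal NumPy sketch of a forward pass. The layer sizes (a 28x28 grayscale input, two hidden layers, 10 classes), the random weights, and the helper names `init_mlp` and `forward` are assumptions for illustration, not a trained model.

```python
import numpy as np

def relu(x):
    # Rectified linear unit: keeps positive values, zeroes out negatives.
    return np.maximum(0.0, x)

def softmax(z):
    # Subtract the row max for numerical stability before exponentiating.
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def init_mlp(layer_sizes, seed=0):
    # One (weights, bias) pair per connection between consecutive layers.
    rng = np.random.default_rng(seed)
    return [
        (rng.normal(0.0, 0.1, size=(n_in, n_out)), np.zeros(n_out))
        for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])
    ]

def forward(params, x):
    # Hidden layers: affine transform followed by ReLU.
    for w, b in params[:-1]:
        x = relu(x @ w + b)
    # Output layer: affine transform followed by softmax over classes.
    w, b = params[-1]
    return softmax(x @ w + b)

# Hypothetical sizes: flattened 28x28 input, two hidden layers, 10 classes.
params = init_mlp([28 * 28, 64, 32, 10])
batch = np.random.default_rng(1).random((4, 28 * 28))  # 4 fake flattened images
probs = forward(params, batch)  # one row of class probabilities per image
```

Each row of `probs` sums to 1, so it can be read as the network's confidence across the 10 classes. With untrained weights the predictions are meaningless; training is what turns this structure into a useful model.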

Different architectures suit different kinds of data. Convolutional Neural Networks (CNNs) are the usual fit for images, while Recurrent Neural Networks (RNNs) handle sequences, such as video frames or the words of an image caption.

How Deep Learning Is Used in Image Recognition

Deep learning has changed image recognition a lot. Since 2012, when AlexNet won the ImageNet challenge, deep learning models have outperformed older methods. On ImageNet they now reach a top-5 error below 5%, which is on par with, and sometimes better than, human performance.
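Error figures like this are often reported as top-k error, where a prediction counts as correct if the true label is among the model's k highest-scoring classes. Assuming that is the metric meant here, a small sketch shows how it can be computed. The function name `top_k_error` and the toy scores are made up for illustration.

```python
import numpy as np

def top_k_error(scores, labels, k=5):
    # Indices of the k highest-scoring classes for each sample.
    top_k = np.argsort(scores, axis=1)[:, -k:]
    # A sample is a hit if its true label appears among those k classes.
    hits = (top_k == labels[:, None]).any(axis=1)
    return float(1.0 - hits.mean())

# Toy example: 3 samples, 3 classes (scores are invented).
scores = np.array([[0.1, 0.5, 0.4],
                   [0.7, 0.2, 0.1],
                   [0.2, 0.3, 0.5]])
labels = np.array([2, 1, 0])

top1 = top_k_error(scores, labels, k=1)
top2 = top_k_error(scores, labels, k=2)
```

Raising k can only lower the error, which is why top-5 numbers look better than top-1 numbers for the same model.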

Feature Hierarchy in Deep Learning Models

Deep learning’s success in image recognition comes from learning features from the data itself. It doesn’t need humans to pick features. Early layers pick up simple visual features such as edges and colors, and deeper layers combine them into textures, parts, and whole objects.

Training Process and Data Requirements

Training deep learning models for image recognition needs lots of images. In supervised learning, each image comes with a label, and the model learns to map images to labels. Big datasets and better digital imaging have helped a lot.

Role of GPU Processing in Deep Learning

GPUs have made training and using deep learning models much faster, because they run the many matrix operations in a network in parallel. This makes image recognition fast enough for real-time use, which is great for applications like facial recognition and medical imaging.

Together, supervised learning, learned feature hierarchies, and GPU acceleration let deep learning do better than older methods. This has opened up new areas like security, medical imaging, and self-driving cars.

Convolutional Neural Networks (CNNs) in Image Recognition

Convolutional Neural Networks (CNNs) are a standard choice for image recognition. Their layers exploit the spatial structure of images, which makes them a good fit for many computer vision tasks.

At the heart of a CNN are convolutional layers and pooling layers. The convolutional layers use filters to spot local features and create feature maps. Pooling layers then shrink these maps, focusing on the most important details. Together, they help identify objects and patterns in images.
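The two operations can be sketched in plain NumPy. This is a minimal illustration, not how a production library implements them: the 6x6 toy image and the hand-written vertical-edge filter are assumptions, and a trained CNN would learn its filters from data.

```python
import numpy as np

def conv2d(image, kernel):
    # "Valid" cross-correlation (what deep learning libraries call convolution):
    # slide the kernel over the image and take a weighted sum at each position.
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool2d(feature_map, size=2):
    # Non-overlapping max pooling; rows/columns that do not fill a window are dropped.
    h = feature_map.shape[0] // size * size
    w = feature_map.shape[1] // size * size
    blocks = feature_map[:h, :w].reshape(h // size, size, w // size, size)
    return blocks.max(axis=(1, 3))

# Toy 6x6 image: dark left half, bright right half.
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-written vertical-edge filter (illustrative only).
kernel = np.array([[-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0]])

feature_map = conv2d(image, kernel)  # shape (4, 4), strongest where the edge is
pooled = max_pool2d(feature_map)     # shape (2, 2), keeps the strongest responses
```

The feature map responds only around the boundary between the dark and bright halves, and pooling halves each dimension while keeping that response.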

CNNs have greatly improved how well images are recognized. They can match or exceed human accuracy on some benchmarks, such as ImageNet classification and certain medical imaging tasks. A key reason is that they learn features from data automatically, without hand-crafted feature engineering.

Classic CNNs have roughly 5 to 25 layers, though newer architectures such as ResNet go well past 100. The convolutional layers extract features, while pooling layers reduce the size of the feature maps. The final layers then classify or predict based on these features.

The success of CNNs in image recognition is clear. They excel in visual tasks and learn important features on their own. As deep learning grows, we’ll see more amazing uses of CNNs in computer vision and beyond.

Data Preparation and Model Training

Training deep learning models for image recognition starts with careful data preparation. This includes image preprocessing and data augmentation. Each step is vital for a reliable and high-performing model.

Image Preprocessing Techniques

One key step is data normalization. We rescale pixel values, typically from 0-255 to a range of 0 to 1. This usually helps the model train faster and more stably.

Another important step is image resizing. We resize images to a specific size for the model. This ensures the model can process visual information effectively.
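Both preprocessing steps fit in a few lines of NumPy. This sketch assumes 8-bit images and uses nearest-neighbor resizing to stay dependency-free. Real pipelines usually rely on an image library with better interpolation, and the 64x64 target size and the helper names are arbitrary choices for the example.

```python
import numpy as np

def normalize(image_uint8):
    # Map 8-bit pixel values from [0, 255] to floats in [0.0, 1.0].
    return image_uint8.astype(np.float32) / 255.0

def resize_nearest(image, out_h, out_w):
    # Nearest-neighbor resize: each output pixel copies one source pixel.
    in_h, in_w = image.shape[:2]
    rows = np.arange(out_h) * in_h // out_h
    cols = np.arange(out_w) * in_w // out_w
    return image[rows[:, None], cols]

# Fake 100x150 RGB image standing in for a loaded photo.
raw = np.random.default_rng(0).integers(0, 256, size=(100, 150, 3), dtype=np.uint8)

# Resize first, then normalize, so the model sees a fixed-size float array.
prepared = normalize(resize_nearest(raw, 64, 64))
```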

Data Augmentation Strategies

To reduce overfitting, we use data augmentation. Techniques like shearing and flipping create modified copies of existing images, which expands the training dataset. This exposes the model to more visual patterns.

By doing this, the model becomes better at recognizing new images. It improves its ability to classify images accurately.
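As a rough sketch of flipping and shearing, the NumPy functions below produce randomly altered copies of an image. The shear is a simple row-shift approximation that only handles non-negative factors, and the 0.2 maximum shear and 50% flip probability are illustrative assumptions rather than recommended settings.

```python
import numpy as np

def flip_horizontal(image):
    # Mirror the image left to right.
    return image[:, ::-1]

def shear_horizontal(image, factor):
    # Shift row r to the right by round(factor * r) pixels (factor >= 0),
    # filling the vacated pixels with zeros.
    h, w = image.shape[:2]
    out = np.zeros_like(image)
    for r in range(h):
        shift = int(round(factor * r))
        if shift < w:
            out[r, shift:] = image[r, :w - shift]
    return out

def augment(image, rng):
    # Flip half of the time, then apply a small random shear.
    if rng.random() < 0.5:
        image = flip_horizontal(image)
    return shear_horizontal(image, rng.uniform(0.0, 0.2))

rng = np.random.default_rng(0)
image = rng.random((32, 32, 3))                       # fake normalized RGB image
augmented = [augment(image, rng) for _ in range(4)]   # four altered copies
```

The label stays the same for every augmented copy, which is what lets augmentation grow the dataset without new annotation work.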

Training Dataset Requirements

A robust image recognition model needs a large, diverse dataset. A common rule of thumb is at least 1,000 images per label, though the real number depends on the task. The images should be of high quality and well-annotated.

It’s also important to have a balanced dataset. This avoids biases and ensures the model performs well across all categories.
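A quick sanity check on the label list can flag both problems before training starts. In this sketch the `check_dataset` helper, the 1,000-image minimum, and the 2x imbalance threshold are assumptions chosen for illustration, and the label counts are invented.

```python
from collections import Counter

def check_dataset(labels, min_per_class=1000, max_imbalance=2.0):
    # Count images per label, then flag small classes and overall imbalance.
    counts = Counter(labels)
    too_small = sorted(name for name, n in counts.items() if n < min_per_class)
    ratio = max(counts.values()) / min(counts.values())
    return {
        "counts": dict(counts),
        "too_small": too_small,
        "imbalance_ratio": ratio,
        "balanced": ratio <= max_imbalance,
    }

# Invented label list: one class is clearly underrepresented.
labels = ["cat"] * 1200 + ["dog"] * 1000 + ["bird"] * 300
report = check_dataset(labels)
```

Here `report["too_small"]` lists the classes that need more images, and `report["balanced"]` shows whether the largest class outweighs the smallest by more than the chosen threshold.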

With careful data preparation and effective training, we can create deep learning models that excel in image recognition. These models have many applications across different industries.

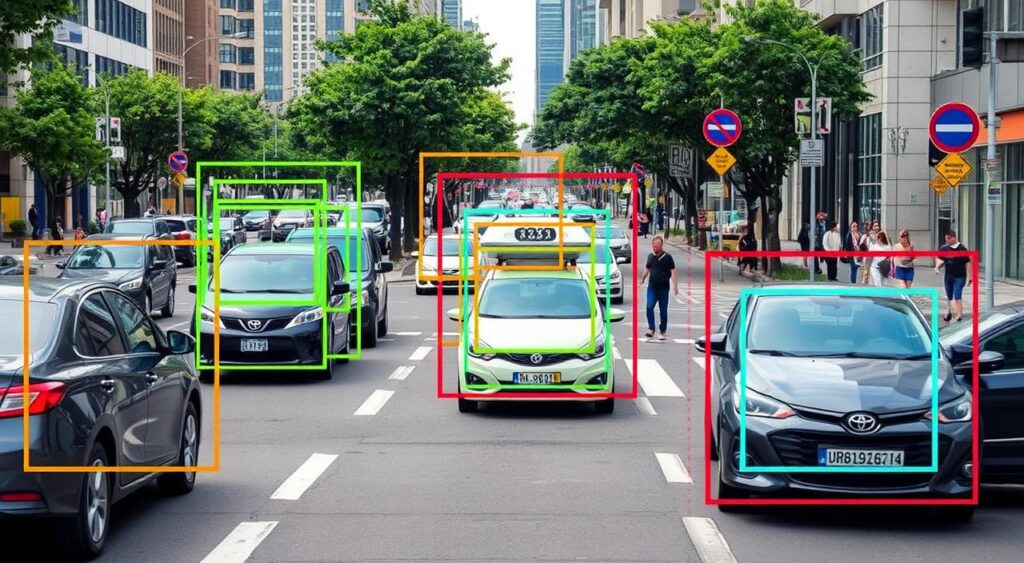

Image Classification and Object Detection Techniques

In computer vision, we have two main tasks: image classification and object detection. Image classification labels an entire image with one tag. Object detection finds and labels many objects in one frame. These tasks have grown stronger with deep learning and big datasets.

Bounding boxes are key in object detection. They show where objects are in an image, helping us track them. Also, multi-class classification models assign each image to one of many categories, while multi-label models can attach several labels to the same image. This gives us a deeper look at what’s in the image.

- Bounding boxes: Delineate the position of detected objects within an image.

- Multi-class classification: Assign each image to one of many categories (multi-label variants allow several at once).
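A bounding box is commonly stored as four numbers, and a standard way to compare a predicted box with a labeled one is intersection over union (IoU). IoU is not discussed in this article, so treat the sketch below as background. The `(x_min, y_min, x_max, y_max)` layout is one of several conventions in use.

```python
def iou(box_a, box_b):
    # Boxes are (x_min, y_min, x_max, y_max) in pixel coordinates.
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])

    # Overlap is zero when the boxes do not intersect.
    inter_w = max(0.0, ix_max - ix_min)
    inter_h = max(0.0, iy_max - iy_min)
    intersection = inter_w * inter_h

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0

predicted = (0, 0, 2, 2)
ground_truth = (1, 1, 3, 3)
overlap = iou(predicted, ground_truth)  # 1 unit of overlap out of 7 units of union
```

An IoU of 1 means the boxes match exactly and 0 means they do not overlap at all.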

Algorithms like YOLO and SSD have made object detection faster and more accurate. They’re used in self-driving cars, surveillance, and robots. These systems need to spot and act on objects quickly.

“The advancements in object detection and image classification have unlocked a wealth of possibilities in various industries, from autonomous driving to smart surveillance. By accurately identifying and localizing objects in real-time, these techniques are transforming the way we interact with and interpret our visual world.”

As computer vision grows, combining image classification and object detection will be crucial. They will help create new AI and deep learning applications.

Transfer Learning in Image Recognition

Transfer learning is a key technique in image recognition. It uses pre-trained models to tackle new tasks with less data. This method speeds up model adaptation and boosts performance, especially when data is hard to find.

Pre-trained Models and Their Applications

Models like VGG-16 have been trained on huge datasets, like ImageNet. The ImageNet subset typically used for this training has over a million images across 1,000 categories. These models are great for model adaptation and domain-specific training because the features they extract transfer well to new tasks.

For example, VGG-16 can classify images into 1,000 categories with great accuracy.

Fine-tuning Strategies for Specific Tasks

To use pre-trained models for new tasks, we fine-tune them. We adjust their weights to fit a specific dataset or problem. This usually means freezing lower layers and training the top layers on the new data.
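The freeze-and-train idea can be shown without any deep learning framework. In the NumPy sketch below, a fixed random projection stands in for the pre-trained lower layers (a real setup would load weights from a model such as VGG-16), and only a small new top layer is trained. The data is synthetic, and all sizes and the learning rate are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n_inputs, n_features, n_classes = 20, 16, 3

# Stand-in for pre-trained lower layers (random here, purely for illustration).
backbone_w = rng.normal(0.0, 0.2, size=(n_inputs, n_features))
backbone_before = backbone_w.copy()

def extract_features(x):
    # Frozen layers: used for the forward pass only, never updated.
    return np.maximum(0.0, x @ backbone_w)

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

# Small synthetic dataset: 50 samples per class around a class-specific mean.
y = np.repeat(np.arange(n_classes), 50)
class_means = rng.normal(size=(n_classes, n_inputs))
x = class_means[y] + rng.normal(size=(len(y), n_inputs))

# New top layer, trained from scratch on the new data.
head_w = np.zeros((n_features, n_classes))
head_b = np.zeros(n_classes)
onehot = np.eye(n_classes)[y]
feats = extract_features(x)  # computed once because the backbone is frozen

losses = []
for _ in range(200):
    probs = softmax(feats @ head_w + head_b)
    losses.append(-np.mean(np.log(probs[np.arange(len(y)), y] + 1e-12)))
    grad_logits = (probs - onehot) / len(y)   # gradient of the mean cross-entropy
    head_w -= 0.05 * feats.T @ grad_logits    # only the head is updated
    head_b -= 0.05 * grad_logits.sum(axis=0)

accuracy = (softmax(feats @ head_w + head_b).argmax(axis=1) == y).mean()
```

Because the backbone never changes, its features can be computed once and reused on every training step, which is part of why fine-tuning only the top layers is cheap.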

In a test, we used transfer learning to classify fruit images with a modified VGG-16 model. We had 150 images per fruit and reached 92% accuracy after 5 training epochs. This shows how powerful transfer learning is, even with little data.

“Transfer learning enables faster model development and improved performance, especially when working with limited domain-specific data. It is particularly useful in medical imaging, where large annotated datasets may be scarce.”

By using pre-trained models and fine-tuning them, we can reuse learned feature extractors and adapt them to a specific domain. This leads to more efficient and effective solutions in many areas.

Real-world Applications and Use Cases

Image recognition powered by deep learning has changed our daily lives. It’s now a key part of many industries. From healthcare to security, and e-commerce to agriculture, it’s making a big difference.

In healthcare, it helps analyze medical images like X-rays and MRI scans. This leads to better disease diagnosis and treatment plans. Also, in transportation, it’s crucial for self-driving cars to detect objects and navigate safely.

Facial recognition is used in security and authentication. It makes access control and identification easier. Social media uses it for content moderation and personalized recommendations, making our online experience safer and more fun.

The retail industry uses image recognition for visual searches. Customers can find products by showing images, not just typing. It also helps in quality control and managing inventory in manufacturing.

In agriculture, image recognition helps monitor crops and detect pests and diseases early. It also optimizes farming practices. As this technology improves, we’ll see even more ways it changes our world.

| Industry | Application | Key Benefits |
|---|---|---|
| Healthcare | Medical Imaging Analysis | Improved disease diagnosis and treatment planning |
| Autonomous Vehicles | Object Detection and Navigation | Enhanced safety and autonomous driving capabilities |
| Security | Facial Recognition | Efficient access control and identification |
| Retail | Visual Search and Product Recommendations | Improved customer experience and sales |
| Agriculture | Crop Monitoring and Disease Detection | Enhanced farming productivity and sustainability |

The global image recognition market is growing fast, and new uses of deep learning and computer vision keep appearing, in healthcare and well beyond.

“The true impact of image recognition will be realized when it becomes deeply embedded in our daily lives, seamlessly enhancing our experiences and solving real-world problems.”

Conclusion

Deep learning has changed how computers see and understand images. It’s getting better fast, with new ideas and ways to use it. We’re looking forward to even more advanced tech in the future.

But we also need to think about the ethics of this tech. Privacy and fairness are big concerns. Even so, deep learning’s growth opens up new opportunities in fields like medicine and self-driving cars.

We’re all about making tech better while keeping ethics in mind. By tackling challenges and exploring new ideas, we can make a big difference. Deep learning will change how we see and interact with the world.